ChipAgents RCA: Autonomous ASIC Root Cause Analysis

Executive Summary

Over 50% of frontend ASIC hardware engineering time is spent on debugging and root cause analysis, churning through millions of lines of code and terabytes of waveform data. Despite this, there are no existing solutions for autonomous root cause analysis that use both code and waveform data. ChipAgents Root Cause Analysis (ChipAgents RCA) is the first Agentic AI based approach to end-to-end bug resolution, using error logs and waveforms, over commercial scale IPs, and across multiple categories of high complexity bugs. We develop a waveform analysis engine purpose built for AI Agents, and a novel multi-agent prover-verifier algorithm for bug tracing. ChipAgents RCA successfully uncovers complex bugs (including back-pressure issues, data corruption, and pipeline misalignment) in large IPs with complex protocols, multiple clock domains, and tens of thousands of lines of HDL. This first-of-its-kind solution unlocks thousands of engineering hours per year per team, freeing teams to complete design implementation and verification closure dramatically more quickly, and ultimately reducing time to tape-out for state of the art SoCs.

ChipAgents AI

ChipAgents AI is the market leader in Agentic AI for hardware design and verification. We were founded out of UC Santa Barbara's NLP group, a group focused on fundamental AI research, and today ship our product and platform to more than half of the twenty largest semiconductor companies globally, working with over 50 companies in total. We are backed by Bessemer Venture Partners and industry strategics including Micron, MediaTek, and Ericsson, and have multi-million ARR. ChipAgents is used daily in production by hardware engineers to design and verify next-generation SoCs: generating HDL and DV collateral, analyzing specifications, generating test plans, and debugging failing simulations.

Introduction

Debugging hardware is an exercise in patience and attention to detail at the largest scales of human comprehension. Hardware engineers are tasked with extending and verifying decades-old IPs, with tens of thousands of lines of code and hundreds of pages of specifications. When tests fail, they're met with noisy log files and waveform databases that exceed gigabytes of compressed signal changes. Hardware presents serious challenges for correctness as well: designs are necessarily highly parallel and asynchronous, and data is always mutable. As a result, bugs manifest frequently, tests fail by the hundred, and the root causes tend to be subtle. Hardware engineers are left with little choice but to meticulously understand a block and its specification. Even engineers experienced with a block will spend hours or days scrubbing through waveforms, cautiously tracing a failure.

Root cause analysis is fundamentally a search problem: a class of computer science problems where the space of possible solutions (in this case, proofs of a root cause) is exponentially large in the input, but verifying a given solution is comparatively easy. Other famous search problems include boolean satisfiability (the backbone of formal verification engines), combinatorial optimization, and chess. Root cause analysis differs from these other problems, however, in that it necessitates the fuzziness of human intuition. An engineer has to bridge the spec, design, golden reference, and waveform in order to determine exactly where the symptom originated, and whether it stems from an error in the design, spec, or testbench.

Today, ChipAgents has built the first system to automate the aforementioned process.

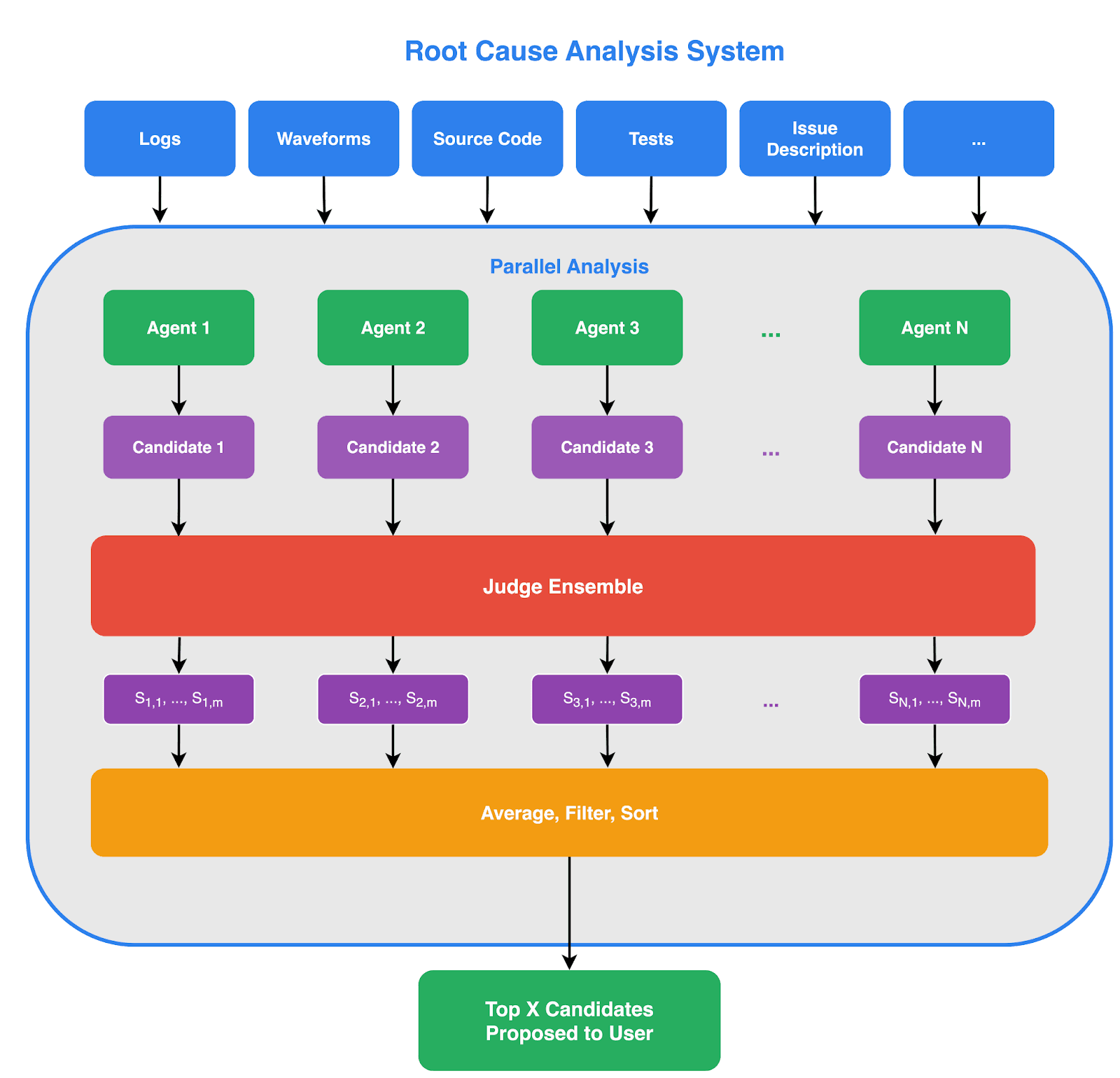

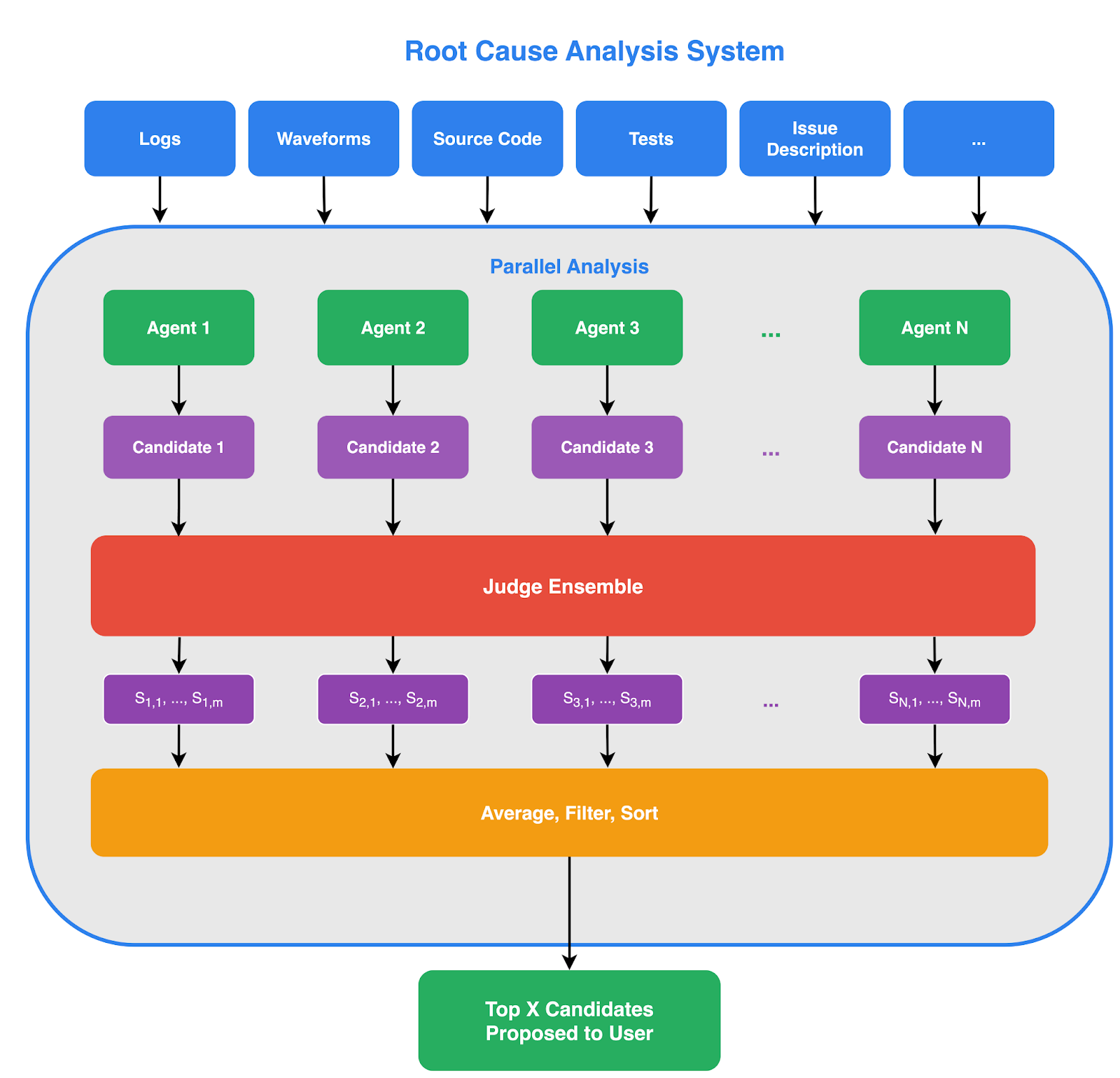

ChipAgents RCA is a multi-agent autonomous root cause analysis system that analyzes designs, testbenches, logs, and waveform databases to automatically trace a failure back to its origin, and suggest a fix for the issue. We have developed a first-of-its-kind waveform understanding engine — purpose built for AI Agents — and a novel multi-agent prover-verifier algorithm to trace hypotheses in parallel. ChipAgents RCA is the first system to adequately understand non-trivial waveform dumps, and uses its shared understanding of code and waveforms to trace real errors in commercial grade IP.

Our approach resolves defects at verification time roughly 3x as often as the state of the art software AI Agent, outperforming it in every class of bug and IP. The results translate into real utility for human engineers: we conduct a case study on a large IP and find that ChipAgents RCA resolves the error at least 12x as quickly as a human design engineer.

We improve the human-agent interface of ChipAgents RCA through a confidence binning system: multiple candidates are proposed to the end user, ranked by confidence, to improve precision.

We conclude with a discussion of AI Agents in general, and how ChipAgents RCA represents a less explored direction in the space of AI Agents.

Methods

ChipAgents RCA combines three key components: a waveform understanding engine, a multi-agent prover–verifier loop, and a self-consistency ranking system. Together, they make it possible for our AI Agent to trace hardware failures from waveform data without human input. The self-consistency layer provides confidence scores, which make the results easier for humans to interpret. Finally, the system executes asynchronously, which makes it a natural fit for CI/CD integration. We cover this extension in more depth in the Discussion section.

Waveform Understanding Engine

Traditional simulators produce massive waveform databases of hundreds of thousands of signals over millions of cycles. While humans can rapidly scan over the data and discard irrelevant information, LLMs are more constrained: to judge the utility of data, an LLM must first fit that data within a restricted context window. Hence, we reframe the problem as searching for data instead of pruning it. Our waveform engine creates a structured index on top of compressed waveform data that enables an agent to ask high level questions about the signals. For example, the agent can ask "where does this signal diverge after the 10th packet is sent?" and receive compact, symbolic answers, which are a far better fit for LLMs' limited memory.
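To make the "search instead of prune" idea concrete, here is a minimal sketch of an index that answers a divergence query from value-change data. It is an illustration only, not the ChipAgents engine: the `WaveIndex` class, its method names, and the signal names are all hypothetical, and a real engine would sit on top of a compressed waveform database rather than in-memory lists.

```python
from bisect import bisect_right


class WaveIndex:
    """Toy index (hypothetical): per-signal sorted lists of value changes."""

    def __init__(self):
        self._times = {}   # signal name -> sorted change times
        self._values = {}  # signal name -> value taken at each change time

    def add_changes(self, signal, changes):
        # changes: iterable of (time, value) pairs, in any order
        ordered = sorted(changes)
        self._times[signal] = [t for t, _ in ordered]
        self._values[signal] = [v for _, v in ordered]

    def value_at(self, signal, time):
        # Value held at `time`: the last change at or before it (None if none yet).
        i = bisect_right(self._times[signal], time)
        return self._values[signal][i - 1] if i else None

    def first_divergence(self, sig_a, sig_b, after=0):
        # A difference can only appear at `after` itself or at a later change
        # point of either signal, so only those times need to be checked.
        change_points = set(self._times[sig_a]) | set(self._times[sig_b])
        candidates = [after] + sorted(t for t in change_points if t > after)
        for t in candidates:
            if self.value_at(sig_a, t) != self.value_at(sig_b, t):
                return t
        return None


# Hypothetical DUT and reference traces that disagree from time 20 to 30.
index = WaveIndex()
index.add_changes("dut.data", [(0, 0), (10, 5), (20, 7), (30, 9)])
index.add_changes("ref.data", [(0, 0), (10, 5), (20, 8), (30, 9)])
print(index.first_divergence("dut.data", "ref.data"))  # 20
```

The point of the sketch is the shape of the answer: the agent receives a single timestamp rather than the raw traces, so the query result fits comfortably in a limited context window.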

We find that LLMs are not natively capable of making intelligent queries without tool-specific post-training. After this post-training, we find that agents can quickly and efficiently identify packets, handshakes, requests and grants, pipeline stages, and the times at which control signals change.

Prover–Verifier Multi-Agent Loop

A good human debugger will be naturally skeptical of initial solutions, whereas agents are well known to be naively trusting. Instead of building backtracking and meticulous verification into the agent, we bring these components outside of the agentic loop. We separate the prover, responsible for proposing a candidate hypothesis, from the verifier, responsible for checking its validity. To thoroughly explore the hypothesis space, we run several prover-verifier pairs in parallel, which communicate as needed when new insight is found. We repeat this process iteratively until a convergence threshold is reached. The multi-agent architecture forms a distributed depth-first search, naturally aligning with the debugging process, where many paths exist yet few are correct. We observe that performance improves reliably as more pairs are run in parallel.
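The loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the production algorithm: `search`, `toy_prove`, and `toy_verify` are hypothetical names, the prover and verifier are plain functions standing in for LLM agents, pairs share insight only between rounds, and "convergence" is reduced to "at least one hypothesis was accepted".

```python
from concurrent.futures import ThreadPoolExecutor


def search(prove, verify, seeds, max_rounds=3):
    """Run one prover-verifier pair per seed, in parallel, for several rounds."""
    notes = []     # insight shared across pairs (here: rejected hypotheses)
    accepted = []  # hypotheses that a verifier has confirmed
    for _ in range(max_rounds):
        snapshot = tuple(notes)  # every pair sees the same notes this round
        with ThreadPoolExecutor(max_workers=len(seeds)) as pool:
            hypotheses = list(pool.map(lambda s: prove(s, snapshot), seeds))
            verdicts = list(pool.map(verify, hypotheses))
        for hypothesis, (ok, note) in zip(hypotheses, verdicts):
            if ok and hypothesis not in accepted:
                accepted.append(hypothesis)
            elif not ok:
                notes.append(note)
        if accepted:  # toy convergence threshold (assumption)
            break
    return accepted, notes


# Toy stand-ins: walk a fixed module chain, skipping modules already ruled out.
CHAIN = ["tb", "axi_bridge", "fifo", "arbiter"]


def toy_prove(seed, notes):
    for module in CHAIN[CHAIN.index(seed):]:
        if module not in notes:
            return module
    return seed


def toy_verify(hypothesis):
    # Pretend the bug lives in "fifo"; the note is the hypothesis that was checked.
    return hypothesis == "fifo", hypothesis


accepted, notes = search(toy_prove, toy_verify, seeds=["tb", "axi_bridge"])
print(accepted, notes)  # ['fifo'] ['tb', 'axi_bridge']
```

Keeping verification outside the prover means a rejected hypothesis becomes shared evidence for every pair, which is one simple way to model the communication between pairs.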

Self-Consistency and Confidence

The final layer aggregates results across agents to estimate confidence, surfacing high-agreement explanations first. Each prover-verifier pair submits a final argument for its confidence to an aggregator. The aggregator cross-references the candidate solutions and assigns a score to each trace. We find that this last step strongly improves the precision of the system, with high-confidence solutions having strong positive predictive power. This addresses a universal concern with agentic systems: it is often unclear whether a proposal can be trusted. By calibrating ChipAgents RCA's confidence, we save the end user time when a solution is rated high confidence, and spare them a wild goose chase when outputs are rated low confidence.
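As a rough illustration of agreement-based ranking, the sketch below scores each candidate root cause by the fraction of prover-verifier pairs that converged on it and then bins the score. Plain vote counting is a stand-in chosen for brevity; the aggregator described above cross-references the arguments themselves. The function name, the thresholds, and the example answers are assumptions.

```python
from collections import Counter


def rank_candidates(final_answers, high=0.5, low=0.25):
    """Rank candidate root causes by agreement across pairs (toy scoring)."""
    votes = Counter(final_answers)
    total = len(final_answers)
    ranked = []
    for cause, count in votes.most_common():
        score = count / total
        if score >= high:
            label = "high"
        elif score >= low:
            label = "medium"
        else:
            label = "low"
        ranked.append((cause, score, label))
    return ranked


# Hypothetical final answers from five prover-verifier pairs.
answers = [
    "fifo off-by-one",
    "fifo off-by-one",
    "arbiter starvation",
    "fifo off-by-one",
    "cdc glitch",
]
for cause, score, label in rank_candidates(answers):
    print(f"{label:>6}  {score:.2f}  {cause}")
```

Presenting the binned label next to each candidate lets an engineer start with the high-agreement explanation and treat the rest as leads rather than conclusions.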

Performance

ChipAgents RCA successfully identifies complex defects in designs under test (DUTs) up to multiple tens of thousands of lines of code, using waveform databases in excess of 1GB compressed (north of 100GB of uncompressed signal changes). Our dataset focuses on the case where there is a faulty design, with the failure caught by the testbench. The cases are representative of commercial scale, IP level debugging tasks of moderate complexity. We provide detailed information on our dataset composition, results, and a comparison against human performance.

Dataset Composition

We evaluate ChipAgents RCA over a matrix of IPs and bug types:

IP types:

- Common protocols

- Bus fabrics

- RISC-V Cores

- Complex, known protocols (DDR, PCIe)

- Arithmetic units

Bug categories:

- Backpressure

- Data corruption

- Invalid protocol usage

- Handshake errors

- Uncovered state machine transitions

- Incorrectly gated control flow

Additional miscellaneous IPs and bugs are included, but not mentioned above.

Each IP is equipped with a testbench, and a known bug is inserted into the design such that the testbench fails with an error reported in the logs. Qualitatively, logs tend to be highly noisy, with opaque assertion failures, similar to those seen in commercial settings.

We provide proxies for complexity below for the median and 95th percentile test cases:

| Proxy | Median | 95th Percentile |
|---|---|---|
| Lines of Code | 5,000 | 100,000+ |
| Clock Domains Involved in Bug | 2 | 6 |
| Protocols Involved in Bug | 2 | 4 |
| Indirection from Testbench to Failure | 4 steps | 10+ steps |

The dataset comfortably contains moderate complexity IP level issues encountered at the commercial scale.

Results

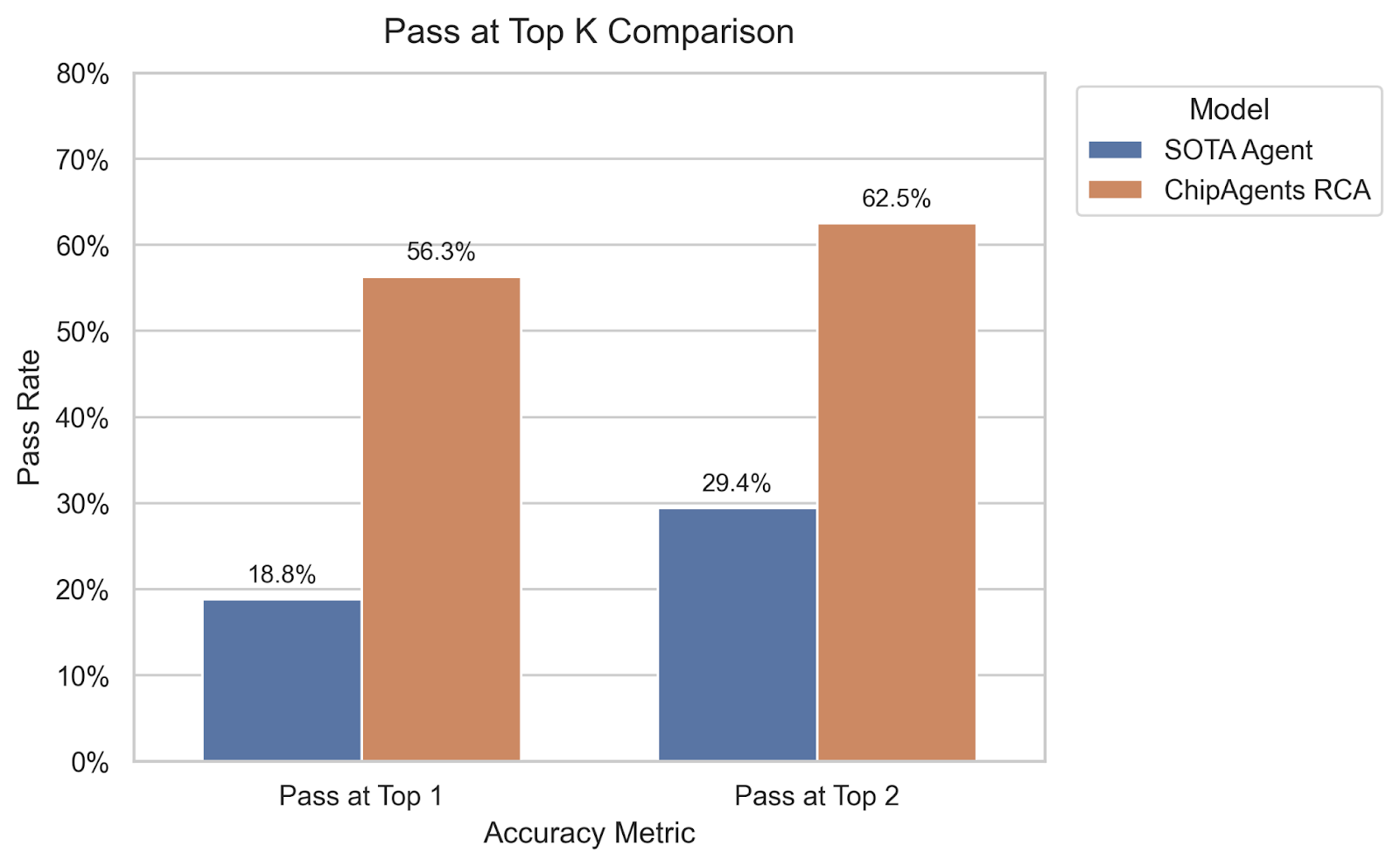

Over our entire debugging dataset, we achieve 56.3% accuracy with our highest rated output and 62.5% with our top two outputs. This compares to 18.8% and 29.4% respectively for the most widely used state of the art software programming agent. On the highest rated output alone (pass@1), this is roughly 3x the performance of the generic solution.
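As a quick check on the "roughly 3x" figure, the ratios follow directly from the accuracies quoted above. The top-two ratio is computed here for completeness and is not a number stated in the results.

```latex
\[
\frac{56.3\%}{18.8\%} \approx 2.99 \quad \text{(highest rated output)},
\qquad
\frac{62.5\%}{29.4\%} \approx 2.13 \quad \text{(top two outputs)}
\]
```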

Case Study

Our dataset is generally very challenging. State of the art systems perform extremely poorly, and our purpose built solutions are roughly at the halfway point. To better understand how these results translate into real world performance, we perform a case study against a human baseline.

We consider a subtle off-by-one error in the AXI of a PCIe 3.0 IP. The IP measures 36k lines of code, and the source of the error is four levels of indirection removed from the testbench. The tests fail, printing a number of errors and assertion failures across a few thousand lines of logs. The waveform database is dumped. This case is representative of a typical moderate complexity commercial setting.

We provide both ChipAgents RCA and a human design engineer identical situations. The design engineer is mid-career, at a large fabless semiconductor company, and has had experience with protocols like AXI, PCIe, and processor IPs. They were unfamiliar with the specific block.

The human design engineer worked for two hours before giving up on the issue, predicting that it would take between 4 and 8 hours to completely finish. They isolated the correct file where the bug was contained, but were unable to determine the specific cause. ChipAgents RCA worked for 10 minutes, and isolated the precise fix, including a correct code patch.

This represents at minimum a 12x speed improvement relative to a human baseline.
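The 12x figure is a lower bound, because the engineer stopped before finding the root cause. The short calculation below uses only the times reported above, and assumes the engineer's 4 to 8 hour estimate refers to total time to completion:

```latex
\[
\frac{120\ \text{min}}{10\ \text{min}} = 12\times \quad \text{(time actually spent)},
\qquad
\frac{240\ \text{min}}{10\ \text{min}} = 24\times
\quad \text{to} \quad
\frac{480\ \text{min}}{10\ \text{min}} = 48\times \quad \text{(projected)}
\]
```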

Discussion

We analyze ChipAgents RCA's performance relative to generic AI Agents and human baselines, the limitations of our evaluation, and avenues to improve human-agent interaction over long asynchronous processes.

ChipAgents RCA strongly outperforms the state of the art for generic AI Agents on hardware debugging. On our dataset, ChipAgents RCA is 3x as effective as the leading software AI Agent. In real case studies, we find that this translates effectively to real world IP level debugging tasks.

We note that ChipAgents RCA is predominately tested at the IP level, instead of the more common and complex sub-system level. We additionally consider numerous avenues to improve human-agent interfaces for long running asynchronous processes, like RCA. Finally, we discuss the implication of AI Agents as applied to search problems compared to typical code generation tasks. We highlight how search problems are a natural fit for Agentic AI, and have the potential to scale much better than existing Human-Agent interactions.

Utility to Engineers

The utility of any system to human engineers is a function of the duration of execution, the rate of correctness, and the change in performance with respect to design complexity.

ChipAgents RCA runs for a long time relative to many existing agentic flows, upwards of half an hour for complex cases. However, this is still substantially less time than human engineers take to debug moderate complexity issues: in our PCIe 3.0 case study, we saw at least a 12x speedup. Additionally, since the process is entirely asynchronous and easily batched across many bugs in parallel, the end-to-end latency is not substantially exposed to the end user.

RCA mode succeeds over medium complexity defects in complex hardware IPs. However, we note limitations in transfer to the full complexity of the commercial domain. Notably, our customers report that the median design and verification engineer operates at the sub-system level — where multiple IPs are knitted together to achieve the underlying spec. Our dataset is limited to IPs.

That said, we note the following characteristics about our dataset:

- Nearly all bugs occur at the handoff between two modules at a handshake, protocol, or buffer

- Bugs frequently originate when crossing clock domains

- Our test data contains very large IPs, including full cores

As a result, while we do not test over a full subsystem or SoC, we have confidence that ChipAgents RCA will be able to resolve errors of the kind enumerated above, which includes many of those that are found at the subsystem level.

ChipAgents RCA has achieved hugely improved performance compared to the state of the art, but there's a huge amount of progress that remains to be made. The most complex and subtle bugs in our dataset remain elusive to all systems. Our human baselines reveal the utility of the existing system more plainly — non-cherry picked bugs that ChipAgents RCA does catch are indeed complex, and take significant time for a human engineer to resolve. Finally, even when ChipAgents RCA fails to identify the exact cause of a problem, it will provide a partial trace, or suggestion of the error. Our evaluation is binary, either correct or incorrect, and does not take into account the utility of partial traces. Hence, ChipAgents RCA can be usefully applied to noteworthy bugs, even if not every bug can be identified exactly, and will degrade gracefully when met with particularly challenging cases.

Considering ChipAgents RCA's short runtime relative to human effort, its ability to find errors of significant complexity, and its graceful degradation in case of failure, RCA mode makes for a powerful engineering tradeoff. Being able to spin off an autonomous process that has a strong likelihood of resolving errors quickly, or at least of providing pointers to the ultimate solution, is a net win in expectation.

Human-Agent Interface

Most generative AI applications run within a "chat loop", with fast generation times and low level supervision by a human user. ChipAgents RCA, however, runs asynchronously and autonomously. Further, hardware engineers tend to tackle hundreds of failure bins at a time. Together, these observations suggest that running RCA in batch mode would be far more effective, with the ultimate goal of automatically triaging hundreds of test failures after nightly or weekly regression runs.

This is a natural evolution of the generative AI interface: once a process can be run frequently and in parallel, the manual human effort of starting and executing the tool becomes the bottleneck. To continue achieving productivity improvements, we move the execution to the point of test failure, removing the busy work of translating test bins to the RCA mode altogether.
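A batch flow of this kind might look like the sketch below, which fans one RCA job out per failure bin and returns the reports sorted by confidence. Everything here is hypothetical: `triage_regression`, the report dictionary shape, and the `fake_run_rca` stub are illustrative assumptions, not a ChipAgents API, and a real integration would be triggered by the regression system rather than called by hand.

```python
from concurrent.futures import ThreadPoolExecutor


def triage_regression(failure_bins, run_rca, max_workers=8):
    """Run one RCA job per failure bin in parallel; most confident reports first."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        reports = list(pool.map(run_rca, failure_bins))
    return sorted(reports, key=lambda r: r["confidence"], reverse=True)


def fake_run_rca(failure_bin):
    # Stand-in for a long-running RCA job; confidences are made-up placeholders.
    canned = {"axi_timeout": 0.9, "fifo_overflow": 0.4, "crc_mismatch": 0.7}
    return {"bin": failure_bin, "confidence": canned[failure_bin]}


reports = triage_regression(
    ["axi_timeout", "fifo_overflow", "crc_mismatch"], fake_run_rca
)
for report in reports:
    print(report["bin"], report["confidence"])
```

Because each job is independent, wall-clock time for the batch is governed by the slowest job and the worker count rather than by the number of failure bins.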

With all of the above said, it's still unclear how best to merge human and AI strengths in root cause analysis. Humans have intuition and experience, and may be able to provide hints to the autonomous system when it fails to converge. The agents have admirable patience and work ethic, but lack an engineer's knowledge of the design and domain expertise. It's very natural to compare the process to place and route (P&R) software, where the P&R engine provides the brute force constraint solving, and the PD engineer constrains the system to improve convergence. We elaborate on this analogy over the remaining sections.

Conclusion

ChipAgents RCA is a first-of-its-kind system to remove the necessity of human based debugging from the hardware flow. Fundamental enhancements like this are relatively rare, with the last serious such case being Synthesis and Place & Route — which eliminated the need for humans to manually convert designs into gates or to place them on a chip. What was previously extremely time intensive became the effort of 1-2 engineers per sub-system.

Modern chip design is unthinkable without synthesis and P&R tools, and we do not suggest that ChipAgents RCA is as revolutionary as these two innovations. However, the development of automatic root cause analysis has parallels. Just as synthesis freed chip designers from manual netlist conversion 30 years ago and has been indispensable ever since, we look forward to a future where automatic root cause analysis frees a generation of design engineers from manual debugging.

Working with the ChipAgents Team

ChipAgents is at the forefront of the largest revolution in EDA since the RTL simulator. If you would like to help our team push the boundaries of what is possible in semiconductors, we're hiring for AI experts, and those at the intersection of AI and EDA. Apply on our careers page, or reach out to our team at our contact email to look for opportunities.