Do we need multi-agent debate in AI for chip design and verification?

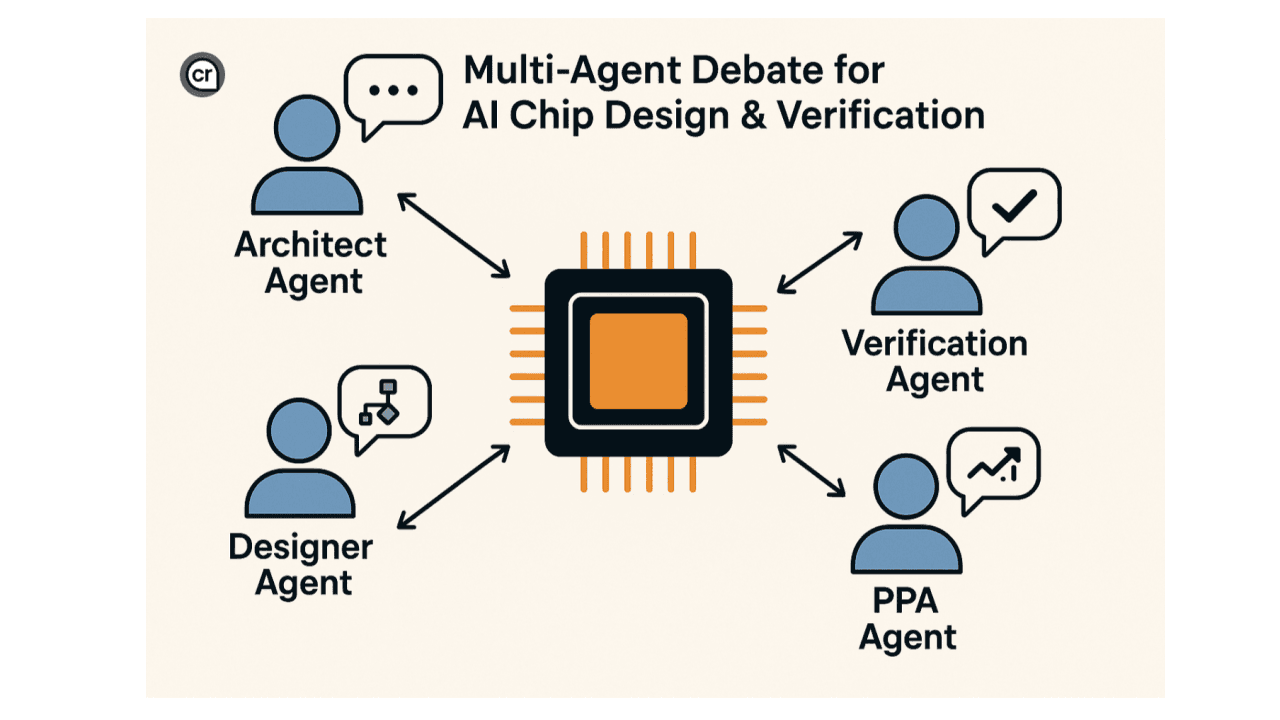

Multi-Agent Debate in AI for Chip Design & Verification

Here's a thought experiment for everyone building silicon with AI in the loop.

When humans design chips, we don't rely on one heroic engineer. We stage a series of structured disagreements. The architect argues for end-to-end intent; the RTL designer fights for implementability and schedules; verification engineers try to break the story with constraints, properties, and coverage holes. Physical design chimes in with PPA reality. DFT points out what we'll regret in bring-up. We go back and forth until the spec becomes something that survives adversaries.

So the question isn't "should AI design chips?" It's: can we make AI argue like we do, productively, with evidence?

The human analogy, made explicit

A good spec review feels like a debate club with lab reports. Someone proposes a microarchitecture. Someone else asks if the queues back up at corner cases. The DV lead turns those "what ifs" into assertions and tests. The team doesn't agree by vibe; they converge because simulation traces, formal counterexamples, timing reports, and area estimates settle disputes. Debate + oracle.

That's the pattern to copy for AI: multiple agents with different priors, grounded by oracles that don't care about opinions (sims, formal checks, STA, synthesis, coverage, PPA).

Do AI agents need multi-agent debate?

Not always. But wherever the search space is huge, requirements are fuzzy, and failure modes are adversarial, multi-agent debate tends to beat a solo agent. Chip design hits all three.

A single model can draft an RTL block from a well-specified interface, or generate boilerplate UVM sequences, or convert English to SVA using known templates. These are narrow, mostly-deterministic transformations. One "focused" agent with tools is faster and has fewer degrees of freedom to wander.

The moment we leave the happy path (ambiguities in the protocol, tradeoffs between latency and area, a DV plan that must anticipate unknown unknowns), structured disagreement creates value. One agent proposes, another criticizes, a third reduces the argument to measurable claims, and a judge agent consults the oracle (run the sim, run the formal engine, compile the properties, check coverage deltas). The cycle repeats until the evidence settles the question.
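To make the propose, criticize, reduce, judge cycle concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `debate` function, the `Claim` type, and the toy FIFO-depth "design" are hypothetical, and the `check` callable stands in for a real oracle such as a simulator or formal engine.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Design = Dict[str, int]  # toy stand-in for an RTL artifact


@dataclass
class Claim:
    """A measurable claim distilled from an objection."""
    name: str
    check: Callable[[Design], bool]  # oracle stand-in: True if the claim holds


@dataclass
class DebateLog:
    rounds: int = 0
    failures: List[List[str]] = field(default_factory=list)


def debate(design, propose, criticize, reduce_to_claims, max_rounds=5) -> Tuple[Design, DebateLog]:
    """Iterate until the oracle finds no failing claim or the round budget runs out."""
    log = DebateLog()
    for _ in range(max_rounds):
        log.rounds += 1
        objections = criticize(design)            # critic agent
        claims = reduce_to_claims(objections)     # reducer agent
        failed = [c.name for c in claims if not c.check(design)]  # judge + oracle
        log.failures.append(failed)
        if not failed:
            return design, log
        design = propose(design, failed)          # proposer agent revises
    return design, log


# Toy scenario (hypothetical): the FIFO must absorb a burst of 8 beats.
BURST = 8


def criticize(design):
    return [f"a burst of {BURST} beats may overflow a depth-{design['fifo_depth']} FIFO"]


def reduce_to_claims(objections):
    # In this toy, every objection reduces to the same checkable claim.
    return [Claim("fifo_holds_burst", lambda d: d["fifo_depth"] >= BURST)] if objections else []


def propose(design, failed):
    return {**design, "fifo_depth": design["fifo_depth"] * 2}


final, log = debate({"fifo_depth": 2}, propose, criticize, reduce_to_claims)
print(final, log.rounds, log.failures)
# {'fifo_depth': 8} 3 [['fifo_holds_burst'], ['fifo_holds_burst'], []]
```

The point of the shape is that the loop exits on oracle results, never on the agents agreeing with each other. In a real flow each callable would wrap a model call or a tool run, and `max_rounds` is the timebox.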

How to think about it for design and verification

Treat each role we respect in human flows as an agent "hat" with a tool belt and a bias.

- Spec/Architect agent: synthesizes requirements, proposes interface contracts, generates executable specs (register maps, protocol timelines, SVA skeletons).

- Microarchitecture agent: explores pipeline/queue/arbiter options; emits RTL sketches and performance hypotheses.

- DV agent: red-teams the design, expands properties, builds test plans, proposes coverage models, and generates adversarial stimuli.

- PPA/Implementation agent: evaluates synth/STA/area/power across corners; flags timing and congestion risks.

- DFT/Validation agent: enforces observability/controllability; checks scan, BIST, and post-silicon hooks.

The important bit: they don't share one brain. You want different failure modes. Let them argue in artifacts, not just text: RTL diffs, property sets, waveform annotations, coverage reports, synthesis summaries. Then give a judge agent the job we give Jenkins: run the flow and report evidence.

When single agent vs. multi-agent?

Use single-agent flows when:

- The task is well-specified, monotonic, and easily testable: generating CSR blocks from SystemRDL; converting English protocol rules into SVA templates; fixing lint/CDC warnings; creating UVM boilerplate; refactoring RTL for style; extracting coverage from a plan.

- Latency and cost matter more than creativity: quick script generation, small "glue" modules, testbench scaffolding.

Use multi-agent debate when:

- You need search and creativity: exploring microarchitectural variants, scheduling tradeoffs, arbitration policies, power gating strategies.

- You need adversaries: trying to break handshake corner cases, reorderings, starvation, metastability defenses, coherency edge cases.

- You need interpretation under ambiguity: incomplete specs, conflicting requirements, shifting PPA targets.

- You need risk surfacing: ask "What assumptions could sink tapeout?" and have agents enumerate, attack, and quantify the answers.

Do we need different tools?

Yes, two layers:

- Oracles and sandboxes (non-negotiable): simulators, formal engines, synthesis/STA, CDC/RDC, lint, power and thermal estimators, emulation/FPGA. If your debate can't call these, you're just trading opinions.

- Coordination fabric (the "workflow OS"): a planner to assign roles, a memory to track hypotheses and evidence, a router to schedule tool runs, a judge to score claims, and a guard to enforce constraints (e.g., "never skip CDC," "no RTL merges without property passes"). Think issue tracker meets CI pipeline, but for agents.

Complementary skills, not identical models

Don't hire five clones. Bias one agent toward spec clarity and decomposition. Bias another toward code synthesis speed. Give a third a paranoid DV mindset tuned to generate nasty stimuli and minimal counterexamples. Let the implementation agent be a pessimist with STA in hand. Their value lies in correlated-but-not-identical perspectives. Diversity produces gradient. (Read our Head of Research Kexun's famous AI agent paper.)

The ensemble connection (boosting, weak learners, judges)

Multi-agent debate done well looks like boosting:

- Start with weak learners (agents that individually are imperfect).

- Each round, focus on the mistakes revealed by the oracle (failing properties, uncovered bins, timing violations).

- Reweight proposals toward the agents that fix those mistakes without breaking earlier wins.

- The judge is your loss function; the EDA tools are the ground truth.

- Over rounds, the ensemble converges to a design + verification plan with empirically lower error (fewer late bugs, better PPA, tighter coverage).

This framing keeps debate honest. The question is not "who argued best" but "who reduced loss on real metrics."
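One way to picture the reweighting step is the sketch below. It is closer to a simple multiplicative-weights update than to AdaBoost proper, and every name in it (`reweight`, the `reward` and `penalty` factors, the agent labels) is a hypothetical choice for illustration. The `outcomes` dictionary stands in for what the oracle reports after a round: how many earlier failures an agent's proposal fixed, and how many previously passing checks it broke.

```python
def reweight(weights, outcomes, reward=1.5, penalty=0.5):
    """Shift trust toward agents whose proposals fixed failures without regressions.

    weights:  agent name -> current weight (any positive numbers)
    outcomes: agent name -> (fixed, regressed) counts reported by the oracle
    """
    updated = {}
    for agent, w in weights.items():
        fixed, regressed = outcomes.get(agent, (0, 0))
        if regressed > 0:
            w *= penalty   # broke an earlier win: lose trust, even if it fixed something
        elif fixed > 0:
            w *= reward    # fixed failures cleanly: gain trust
        updated[agent] = w
    total = sum(updated.values())
    return {agent: w / total for agent, w in updated.items()}


weights = {"uarch": 1 / 3, "dv": 1 / 3, "ppa": 1 / 3}
outcomes = {
    "uarch": (2, 1),  # fixed two failing properties but regressed one
    "dv": (3, 0),     # closed three coverage holes, no regressions
    "ppa": (0, 0),    # no measurable change this round
}
new_weights = reweight(weights, outcomes)
print({agent: round(w, 3) for agent, w in new_weights.items()})
# {'uarch': 0.167, 'dv': 0.5, 'ppa': 0.333}
```

Penalizing any regression before rewarding fixes is a design choice that encodes "without breaking earlier wins"; a real judge would likely weigh the severity of each regression rather than treat them all alike.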

Pitfalls to avoid

Unbounded chatter: Without oracles in the loop, agents will hallucinate consensus. Every claim must be executable: a property to prove, a test to run, a netlist to analyze.

Metric myopia: If the judge only sees performance, it will ignore testability; if it only sees coverage, it may ship a slow, hot chip. Use multi-objective scoring: functional correctness first, then PPA, then schedule risk.
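A minimal sketch of "correctness first, then PPA, then schedule risk" is a lexicographic comparison, which Python tuples give you for free. The `Report` fields and the candidate numbers are made up for illustration; in practice they would come from your formal, synthesis, and planning tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    name: str
    failing_properties: int  # functional correctness, lower is better
    ppa_cost: float          # hypothetical normalized PPA cost, lower is better
    schedule_risk: float     # hypothetical 0..1 estimate, lower is better


def score_key(report):
    # Tuples compare left to right, so a later field only matters
    # when every earlier field is tied.
    return (report.failing_properties, report.ppa_cost, report.schedule_risk)


candidates = [
    Report("fast_but_buggy", failing_properties=2, ppa_cost=0.70, schedule_risk=0.2),
    Report("correct_large", failing_properties=0, ppa_cost=1.10, schedule_risk=0.3),
    Report("correct_lean", failing_properties=0, ppa_cost=0.95, schedule_risk=0.5),
]

ranking = sorted(candidates, key=score_key)
best = ranking[0]
print([r.name for r in ranking])
# ['correct_lean', 'correct_large', 'fast_but_buggy']
```

Note that the design with the best raw PPA number loses outright because it fails properties. A strict lexicographic order also means schedule risk only breaks exact PPA ties, so a practical judge would probably bucket PPA cost (for example, "within target" or not) before comparing.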

Spec drift: Agents that "fix" issues by silently changing intent are dangerous. Lock the spec as an artifact; require change proposals and evidence before edits.
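One lightweight way to "lock the spec as an artifact" is to pin a content hash and refuse edits that arrive without evidence. The sketch below assumes the spec is plain text; the `LockedSpec` class, its method names, and the evidence file name are hypothetical, and a real flow would more likely lean on version control plus review rules.

```python
import hashlib


def digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class LockedSpec:
    """Holds the approved spec text and only changes it via an evidenced proposal."""

    def __init__(self, text: str):
        self._text = text
        self.locked_digest = digest(text)
        self.history = []  # (previous digest, rationale, evidence) per accepted change

    @property
    def text(self) -> str:
        return self._text

    def is_unmodified(self, working_copy: str) -> bool:
        """True if an agent's working copy still matches the locked spec."""
        return digest(working_copy) == self.locked_digest

    def propose_change(self, new_text: str, rationale: str, evidence: list):
        if not evidence:
            raise ValueError("spec change rejected: no evidence attached")
        self.history.append((self.locked_digest, rationale, tuple(evidence)))
        self._text = new_text
        self.locked_digest = digest(new_text)


spec = LockedSpec("valid must stay high until ready is seen")
drifted = "valid may drop before ready is seen"

silent_edit_detected = not spec.is_unmodified(drifted)

try:
    spec.propose_change(drifted, rationale="simplifies the sender", evidence=[])
    rejected = False
except ValueError:
    rejected = True

# Same change, now with a (hypothetical) oracle artifact attached.
spec.propose_change(drifted, rationale="simplifies the sender",
                    evidence=["formal_run.log"])
print(silent_edit_detected, rejected, len(spec.history))
# True True 1
```

The hash check catches an agent that quietly rewrote intent in its working copy; the evidence requirement turns the rewrite into a reviewable proposal instead.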

A minimal playbook to try now

- Make the spec executable: interface contracts, timing diagrams, SVA skeletons, register maps. Agents debate through these.

- Set up the judge: one button that runs sim/formal/synth/STA/coverage on proposals and returns structured diffs and scores.

- Define roles and rounds: propose → criticize → test → score → iterate. Timebox rounds; archive artifacts; promote the winner.

- Gate merges on evidence, not persuasion: "No property regressions; coverage ↑; PPA within target; no new CDC issues."
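The merge gate in the last step can be sketched as a pure function over two evidence snapshots. The `Evidence` fields and the numbers are illustrative assumptions, and the coverage rule here reads "coverage ↑" literally as a strict increase; relax it to "no drop" if that suits your flow better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    properties_passing: int
    coverage_pct: float
    ppa_within_target: bool
    cdc_violations: int


def gate(baseline: Evidence, proposal: Evidence):
    """Return (ok, reasons). Every reason is a measurable failure, never an opinion."""
    reasons = []
    if proposal.properties_passing < baseline.properties_passing:
        reasons.append("property regression")
    if proposal.coverage_pct <= baseline.coverage_pct:
        reasons.append("coverage did not increase")
    if not proposal.ppa_within_target:
        reasons.append("PPA outside target")
    if proposal.cdc_violations > baseline.cdc_violations:
        reasons.append("new CDC issues")
    return (not reasons, reasons)


baseline = Evidence(properties_passing=120, coverage_pct=87.5,
                    ppa_within_target=True, cdc_violations=0)
good = Evidence(properties_passing=124, coverage_pct=89.0,
                ppa_within_target=True, cdc_violations=0)
bad = Evidence(properties_passing=118, coverage_pct=91.0,
               ppa_within_target=True, cdc_violations=2)

print(gate(baseline, good))  # (True, [])
print(gate(baseline, bad))   # (False, ['property regression', 'new CDC issues'])
```

Returning the list of reasons, not just a boolean, gives the next debate round something concrete to attack.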

The TL;DR that isn't shallow

You don't adopt multi-agent debate because it's fashionable. You adopt it because chips are arguments with physics, and arguments are won with experiments. Use a single agent for tight, local, deterministic work. Use a debating ensemble for open-ended, high-stakes decisions. Give them the same oracles your team trusts. And measure success the way we already do: fewer late bugs, faster convergence to PPA, and a DV plan that found the monster while it was still cheap.

If your AI can disagree with itself and then let reality pick the winner, you're not replacing engineers; you're giving them a sharper room to think in.